Biases and heuristics

1) Identify at least one of the biases and heuristics you’ve found to be challenging (in life and/or in the scenario).

2) Next, identify a historical situation from the Latimer reading in which this may have been at work.

3) What would you recommend to reduce the problems caused by your chosen bias or heuristic in the situations you’ve discussed?

Instructions: Your initial post must be at least 250 words. It MUST make reference to at least two of the assigned readings (to show that you have read them and to demonstrate your mastery of the content). Make sure you provide parenthetical citations and a reference list. Please respond to at least two other students. Peer responses should be between 100 and 200 words and contain a reference to at least one of the assigned readings (to help others process the material).

Assignment info:

Required Reading Assignment

We begin this class with a two-pronged approach. First, at the fundamental level, this lesson asks you to engage with several human biases and cognitive errors. Second, on the historical front, Latimer’s book sets the stage for understanding deception in the context of conflict within the Anglo-American experience. Together, these two areas will provide a context for better understanding deception and information principles for the remainder of this course and in your professional work.

First, here are the basic psychology readings. Many of these are the first descriptions of these biases and heuristics by leaders in the field. The goal is not to understand all the literature but to take away an understanding of the specific cognitive terms that you’ll use later.

Frankel, Allan. 2011. “Situational Awareness and Normalcy Bias.” Neil Strauss Blog. (March 1). Accessed January 23, 2017. https://www.neilstrauss.com/survival/situational-awareness-and-normalcy-bias/. (3 pages)

Molden, Daniel. 2014. “Understanding Priming Effects in Social Psychology: What Is ‘Social Priming’ and How Does It Occur?” Social Cognition 32, special issue (June): 1-11. (9 pages)

Nickerson, Raymond S. 1998. “Confirmation Bias: A Ubiquitous Phenomenon in Many Guises.” Review of General Psychology 2, no. 2: 175–220. (37 pages)

Tversky, Amos, and Daniel Kahneman. 1981. “The Framing of Decisions and the Psychology of Choice.” Science 211, no. 4481 (January): 453-458. (6 pages)

Tversky, Amos, and Daniel Kahneman. 1973. “Availability: A Heuristic for Judging Frequency and Probability.” Cognitive Psychology 5, no. 2: 207-232. https://msu.edu/~ema/803/Ch11-JDM/2/TverskyKahneman73.pdf (25 pages)

Tversky, Amos, and Daniel Kahneman. 1974. “Judgment Under Uncertainty: Heuristics and Biases.” Science 185, no. 4157 (September): 1124-1131. http://psiexp.ss.uci.edu/research/teaching/Tversky_Kahneman_1974.pdf (8 pages)

Turner, Robert. n.d. “Social Influence in Psychology: Theories, Definition, & Examples.” Video. http://study.com/academy/lesson/social-influence-in-psychology-theories-definition-examples.html (7.41min)

Rhodes, Kelton. 2002. “An Introduction to Social Influence.” http://workingpsychology.com/intro.html (1 page)

Cialdini, Robert. 2008. Influence: Science and Practice. 5th ed. New York: Allyn & Bacon. Begin Chapters 1-4. (139)

Latimer, Jon. 2001. Deception in War. New York: Overlook Press. (1-59)

Once you’ve finished these readings, it would be a good time to take a break from reading and watch a short presentation on the nature of influence: Social Influence in Psychology: Theories, Definition & Examples. You can find it here: http://study.com/academy/lesson/social-influence-in-psychology-theories-definition-examples.html. Follow this up with the reading “An Introduction to Social Influence” at http://workingpsychology.com/intro.html. Read from the introduction to the section on persuasion, then come back here and continue through the lesson.

These readings support the following course objectives:

CO-1: Recognize psychological theories underpinning informational/deceptive practices.

CO-2: Demonstrate the use of psychological tools in informational/deceptive practices.

CO-3: Deconstruct the concept of deception and its applications in different contexts.

CO-4: Evaluate the use of influence/deception techniques in historical cases.

—————————————————————-

Introduction

This is a fast-moving class for professionals who want to add to their skill sets or hone existing ones. Its subject is indeed a murky arena of thinking and practice; however, it is not unfathomable. It does tend to challenge those who are comfortable with more routinized forms of intelligence operations.

Here is a koan that points to the way we’ll likely wrestle with some ideas in this class.

Not the Wind, Not the Flag:

Two monks were arguing about a flag.

One said: ‘The flag is moving.’

The other said: ‘The wind is moving.’

The sixth patriarch happened to be passing by.

He told them: ‘Not the wind, not the flag; the mind is moving.’

Mumon’s Comment: The sixth patriarch said: ‘The wind is not moving, the flag is not moving. Mind is moving.’ What did he mean? If you understand this intimately, you will see the two monks there trying to buy iron and gaining gold. The sixth patriarch could not bear to see those two dullards, so he made such a bargain (Zen@Metalab n.d.).

Wind, flag, mind moves.

The same understanding.

When the mouth opens

All are wrong.

Much of what goes on in deception, propaganda, and disinformation shares in the thought behind this koan. There appear to be many things going on. Many look and see the flag: the obvious. Others sense the wind, though nothing can be seen. Some look beyond to other factors. Some look to the mind, but all can change with the output of the mouth (communication), which shapes new meaning and new activity.

Today, many view discussions of these topics as repugnant or worse. Perhaps you had that feeling when you saw the picture of, or read the quote from, Adolf Hitler. Throughout much of history, terms such as propaganda didn’t have negative connotations. That is a recent phenomenon that came about largely because of counter-propaganda efforts by the Americans and British. Even if those associations are enough to dissuade you from studying these topics, consider this: these practices are used routinely by many agencies and people, and even private companies make use of persuasion practices. If you are to learn how to thwart the denial and deception efforts of others, you will need to understand the principles and practices behind them. This is the primary reason for the course.

For a professional to improve his/her ability to cut through the denial and deception inherent to these practices, a greater understanding of psychology, sociology, communication, culture, history, and more is needed. Further, the analyst needs tools that help order “habits of the mind” and limit inherent bias that enables successful deception. That’s the focus of this lesson and the entire course.

Persuasion and its Many Aspects

We begin this week by establishing fundamental definitions, concepts, and related practices. For that reason, this is one of the most foundational lessons in the course. It’s important to remember that this course, despite elements that are very personal or individual in nature, is to be viewed from the perspective of a state (country) achieving national objectives. The tools provided in the course should be viewed from that perspective to achieve the best understanding.

Within this material, it’s important to remember that there is also a hierarchy of concepts. Influence is the broadest of ideas and covers all that we talk about. Yet, it’s so broad that it’s not very helpful for much of what we’re doing in this course.

Hierarchy of Persuasion

It’s important to recognize that many things are related to persuasion, but not all of them are inherently deceptive. This lesson explains elements of the first and second tiers as well as related psychological concepts. Along the way, a number of terms are defined to reduce the confusion caused by their inaccurate use in everyday speech. Reducing that confusion should make class discussion more productive as we examine aspects of the third tier.

[Figure: Hierarchy of Persuasion, showing the 1st, 2nd, and 3rd tiers]

Definition of Key Terms

[Figure: key terms: persuasion, influence, and deception]

This section discusses the nature of influence and the subordinate concepts of deception and information. It’s important to remember that many of the concepts discussed in this class look at human psychology and the ways it can be exploited or protected. In the case of deception, the exploitation normally uses “lies” of commission or omission. In other words, the deception is carried out either by presenting false information as truth or by simply leaving information out. Yet this arena can be a murky one with frequent overlap. To help increase your ability to work through this domain, let’s first look at the concept of influence.

Key Psychological Functions

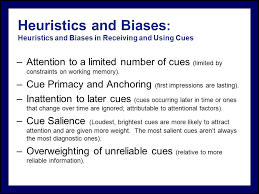

This lesson looks at two specific types of functions that are known to cause cognitive errors: biases and heuristics. The term bias is likely well known to you. One may be biased against a category of people, a way of doing something, or a specific thing; however, unlike the media-promoted idea of bias, biases are not always negative. For example, there’s nothing wrong with preferring rice over potatoes or westerns over mysteries. Nevertheless, biases lead individuals to make decisions that, by necessity, leave out, ignore, or alter thinking in ways that might not otherwise occur. In intelligence collection, analysis, targeting, etc., biases may unduly remove viable, even critical, targets from consideration.

The second area involves heuristics. Heuristics are mental shortcuts that speed thinking by focusing attention and streamlining analysis. For example, if you were asked whether you’d like fish or fowl for dinner, it’s unlikely that you’d go through the entire list of fish or birds that you know. Most people already have in mind which fowl they might eat. In the United States, that list would likely include chicken, turkey, and duck. More adventurous eaters might include grouse, partridge, squab (young pigeon), etc. Nevertheless, it’s virtually impossible to find someone who included hummingbirds, ostriches, penguins, egrets, golden eagles, etc. when considering what to eat for dinner. That limiting process is the result of a heuristic that tells your brain there are only a limited number of variables to consider. Yet, while this may help in making dinner decisions, it may impede efforts to determine what an opponent might do in a real-world threat situation.


Confirmation Bias

Confirmation bias refers to the tendency for people to select the information that supports their preconceived notions. Depending on how the information is handled, it may also be called the Texas Sharpshooter Fallacy, cherry picking, “myside” bias, confirmatory bias, etc. Regardless of the name, the process involves mental efforts to eliminate competing ideas and focus on the data points that support one’s case. One simply draws the proverbial bullseye around the data points that fit preconceived ideas.

Elections are an excellent place to look for confirmation bias. If you love Candidate Smith but despise Candidate Jones, you’ll look for information that supports your candidate and denigrates his/her opponent. Further, when things become too troubling, you might find yourself coloring that information to fit your biases. For example, if Candidate Smith barely squeaks out of criminal charges after an investigation, you might trumpet Smith’s vindication. You might even proclaim Smith’s innocence and decry the abuse of power by those conducting the investigation, even if many examples of troubling or questionable behavior by Smith came to light in that investigation. Conversely, you’d delight in seeing Jones called out for kicking his/her neighbor’s dog, and you might take it as clear evidence of how evil Jones really is. The bottom line is simple. Humans seek to be right. Thus, they’ll look for evidence to support their views, even if that means ignoring clear evidence to the contrary.

One of the easiest examples of confirmation bias to visualize is the Texas Sharpshooter Fallacy. Imagine a less-than-stellar shooter firing at the side of a barn. After firing all his/her rounds, the next step is to see how accurate the shots were. Now imagine the shooter drawing a bullseye around the biggest group of shots and then adding concentric circles out from there. Success! Of course, painting the target after the shooting, rather than aiming at one drawn beforehand, brings the shooter’s accuracy into question. Yet this is often how people approach the analysis of issues. They begin with existing biases, desired outcomes, etc., and then find ways to pull the evidence together into a credible package to show to others.

In the realm of intelligence, it’s easy to see where this could influence one’s analysis or actions. When someone “knows” the bad guy and what the bad guy will do, that individual will be looking for confirmation of what is already known. That’s true for many people, even when there is clear evidence to the contrary. People like to know they’re right, and they like things that are easy. Voilà! An answer with little analysis, based on assumptions. One example can be seen in the Vietnam War. Many U.S. analysts looked at the North Vietnamese military leader General Vo Nguyen Giap through the lens of their training. Because he was an Asian Communist, they “knew” that he must have borrowed his warfare theories from the Chinese Communist leader Mao Zedong. After the war, corrections were necessary. In fact, the underlying animosity between Vietnam and China, as well as Giap’s European education, meant that he favored Clausewitz and Jomini for his insights into conducting warfare. By the way, these were the two predominant influences on U.S. land force doctrine and practice!

One of the best counters to this problem involves establishing criteria for analysis beforehand. Such criteria must be written out and available to others who review the final work. This increases the odds that both the originator and reviewer might catch problems in the analysis. Without established analytical frameworks and measures, it’s virtually impossible to avoid some degree of confirmation bias.


Normalcy Bias

This problem tends to be most evident in high stress or crisis situations. Though there is often discussion of the “fight or flight” reflex in which humans either confront or actively avoid a problem or conflict, normalcy bias is the less discussed “hide” reflex. Many creatures exhibit this behavior. It can be a life-saving process when a creature relies upon its natural camouflage or superior position to avoid detection by predators. Unfortunately, this doesn’t help individuals trapped in a fire or other situations wherein the threat will overtake them.

The type of behavior caused by normalcy bias has often been blamed for injuries and deaths in humans. For example, in aircraft fires on the ground, many survivors report seeing other, unharmed passengers sitting motionless in their seats or going about normal tasks (e.g., collecting their belongings) that were inappropriate for the situation. Seldom were these people among the survivors unless others intervened. The situation overwhelmed their ability to process it mentally because they had never experienced or even considered such a catastrophe. Survivors from such accidents typically came from three groups. The first group had either received prior training and/or had considered the possibility beforehand and prepared a plan (Remember those boring pre-flight briefings by the flight crew?). The second group had been helped out of the burning aircraft by members of the first group or by outside rescuers. The third and final group might be considered the “blind luck” group, because they were often ones who had fallen out, been blown out, or otherwise been removed from the situation through no effort of their own or others. Of course, normalcy bias doesn’t come into play only in aircraft accidents. It’s seen in many human interactions.

Open conflicts such as fights, arrests, and combat also tend to trigger normalcy bias. If you’ve ever been in any of these situations, you know the responses triggered by your body and the way time awareness changes. You probably also realize how important your training and experience were in moving you through the process. For those who lack such experience, trust the rest of us! The human brain must process new situations, but not all situations are conducive to on-the-job learning. Many combat systems use a color scheme to represent this process. For example, military and police often use one that visualizes green as normal conditions, yellow as high alert status, and red as active threat/crisis. That’s simple enough, because similar concepts are seen elsewhere. However, it’s the final stage, black, that is tied to normalcy bias. In a crisis, people can easily go from green or yellow to black, the stage in which the mind shuts down or slows so dramatically that meaningful action is no longer possible. It’s at the black stage of mental processing that normalcy bias is at its worst. It’s here that an opponent might capture, wound, or kill you while your mind is still processing options or, lacking any decision, fixating on the things you’re accustomed to.

Normalcy bias can affect those in intelligence in a number of ways. Because humans seek to establish a normal state, any change to that can cause mental roadblocks to analysis. It may be as simple as slowing the process or as bad as “locking up” someone’s mental functions for a period of time. This can happen even outside of direct combat.

As has already been discussed, one of the best counters to normalcy bias is experience. This may come from actual experience or experience gained in training. This may also be “borrowed” from others by the use of simple devices like checklists — mental or actual. This is why you see most military organizations and some aspects of the intelligence community having checklists at hand. When the feces hit the proverbial rotating blades, it’s not time to start thinking from scratch. Even in analysis, one might run a checklist, analysis form or some other device that moves one step by step through the needed analysis action items.

Another device for overcoming the problems associated with normalcy bias is the practice of running worst case analysis or planning. If one considers what might happen next, that individual will be more likely to respond effectively if conditions change. This is somewhat true, even when the conditions don’t change exactly as predicted. As noted earlier, a good example of this can be seen in the testimonies of those in aircraft accidents. Many of those who survive often attribute their actions to thinking about the worst-case possibilities and the necessary actions to respond. Many of the remaining survivors attribute their survival to being pushed, pulled or otherwise directed by those who had planned ahead.


Framing

When a person looks at a problem, it is seldom with fresh eyes (without bias). Not surprisingly, the new problem is viewed through the lens of past experience. That experience is linked to the current context of the problem and helps put the “frame” around what will be examined and how it will be viewed. As a rule, younger people have fewer points of reference to draw on and thus may be more flexible in their processing. As one ages, as long as cognitive faculties remain intact, one’s increased number of points of reference can increase analytic speed while also providing less biased results (Erber 2010; Peters, Finucane, MacGregor, and Slovic 2000). Age alone isn’t sufficient for this, however; individuals must also have training and life experience to draw on. Older or younger individuals without cognitive resources from training and experience are more likely to use emotional frames of reference (Watanabe and Shibutani 2010).

Because framing leads individuals to apply past practices and ideas, it is often linked to or used synonymously with agenda setting. The term agenda setting is often used in the context of past media messaging that set the “agenda” for one’s thinking and actions. In this, there is normally a prioritization of what is to be accepted and what is to be rejected (McCombs and Shaw 1972).

One of the ways intelligence professionals have found to reduce the effects of framing is to employ techniques like Red Team or Red Hat exercises that place them in the role of an opponent or other actor. When one is forced to think like someone else, the problems that come from applying one’s own experience to others can quickly become apparent. The greater the cultural distance between analyst and target, the more necessary such efforts are to reduce errors caused by framing.


Priming

Priming is related to, but notably different from, framing. Humans respond to their environment both physically and mentally. One of the most common aspects of mental engagement is called priming. Priming helps the mind focus on a specific schema (the way humans order and categorize the world, e.g., all cats purr and have tails, all chairs have four legs, etc.). Despite debate about how it works, there is clear evidence that humans tend to use the most immediate schema created by recent stimuli. A schema may be immediate because the person in question has just heard, seen, or experienced something related. In either case, the resulting mental activity is implicit, meaning it is not consciously recognized or processed.

A classic example can be seen in the famous Alfred Hitchcock movie Psycho. This movie includes one of the most famous horror scenes in Western cinema: a shadowy figure with a knife attacks and kills a young woman in a motel shower. Viewers continue to report an increased fear of attack while bathing or showering after seeing this scene. If you were one of them, you might recall the actions you took: locking the door(s), checking the window(s), considering routes of escape, considering means of defense, etc. If you did anything like this while still using your regular bathroom, the only thing that changed was the awareness (priming) provided by the movie scene.

Another common example becomes evident to many people when they make a major purchase. For instance, when a person buys a new car, he or she may suddenly see the same type of car “everywhere.” Those cars existed before the purchase, but there was no reason to notice them. Now they seem frequent, and the purchaser begins to construct ideas about who buys them, why they buy them, etc. Together, these examples illustrate negative and positive priming, both of which use the most recent relevant information to interpret events in the current environment. In the first example, one draws on negative priming cues about what bad things could happen in a similar situation. Notably, negative priming can slow mental processing. Conversely, positive priming can help speed processing time. In the second example, the new buyer suddenly notices things that never evoked awareness before. With this new awareness, he/she might recognize similar vehicles more quickly.

As these examples suggest, the priming effect tends to be shorter-lived than the effects of framing (Roskos-Ewoldsen, Roskos-Ewoldsen, and Carpenter 2009). More recent and more intense primes create stronger effects (Roskos-Ewoldsen et al. 2009). Not surprisingly, once one is primed with specific concepts and the conditions engage one’s emotions, changes in attitude and behavior normally follow quickly and without conscious thought.

Though priming, like framing, tends to highlight or make salient a specific point, it doesn’t tend to provide the specific evaluative or prioritizing suggestions that framing does (Scheufele and Tewksbury 2007). Once opinions are set, individuals tend to seek information that is consistent with their views. This can occur in both framing and priming; however, in priming, this information tends to fit existing evaluative measures rather than providing the evaluative measures, as in framing. Priming effects of this kind have been shown to affect evaluations of politicians (Iyengar and Kinder 1987; Sheafer and Weimann 2005; Moy, Xenos, and Hess 2006) as well as the way other genders, races, classes, etc. are perceived (Hansen and Hansen 1988; Oliver, Ramasubramanian, and Kim 2007). From these primes, people construct mental models to better understand the situation and to prepare for future events (Wyer 2004; Johnson-Laird 1983; Roskos-Ewoldsen et al. 2009; Wyer and Radvansky 1999).

Analysts are constantly influenced in ways that might not be evident. For example, subtle facial expressions, body language, or changes in tone or word choice by managers and commanders may trigger thinking that is less than optimal for good analysis. Though leaders sometimes make it clear what they want an analyst to find, the signal is often more subtle. Yet the human mind is wired to detect and act on these cues, and studies support this. For example, the use of certain words has been found to trigger changes in audience response (Draine and Greenwald 1998).

An essential counter to the problem of priming is self-awareness. Where are your blind spots, problem areas, personal biases, etc.? These are the areas in which primes are most likely to pass undetected. It’s impossible to deflect every prime, given the mass of information inputs in any given day; however, one can minimize the effect by analyzing inputs more carefully. The more emotionally laden the input, the more care is needed in analyzing it.


Availability Heuristic

Some estimates put the number of decisions made by the average American at more than 50,000 a day! It’s therefore not surprising that the brain creates shortcuts to reduce the overall processing burden (Tversky and Kahneman 1974). As a result, mundane activities may be categorized or analyzed in ways that are not necessarily accurate. For example, in one study, test subjects were asked to list six reasons they might consider themselves assertive, while subjects in another group were asked to list 12 reasons. Not surprisingly, more of the subjects asked for six were able to complete all or most of their lists, whereas those asked for 12 reasons often could not complete theirs. When both groups were asked how assertive they felt, the six-reason group rated themselves higher. Evidence suggested they did this because they believed they had a more complete data set, even though those in the 12-reason group often had more than six reasons to support their assertiveness.

Those who use this technique to influence others often rely on more vivid or emotional content in their communications. They also repeat key elements more. The result is that the message is more firmly lodged in the target’s brain. A classic example in interpersonal communication involves a “hunk” or “babe” communicating with a target who “wasn’t in their league.” A touch, a wink, and some suggestions might lead the target to “decide for himself/herself” to do exactly what is being suggested. There are many other examples, but the key is the impact of highly visual, emotive, and/or repetitive language from others.

In a professional setting, people commonly act on whatever they recall most readily. The more easily one can recall the potential benefit or penalty of an action or non-action (often reinforced by visual, emotive, or repetitious elements), the more strongly that recall will drive the decision-making process. A perceived frequency need not come from repeated occurrences of a single event. It can be inferred from the mind’s attempts to link seemingly related events of sufficient immediacy and impact (Tversky and Kahneman 1973).

Countering the problems created by the availability heuristic calls for the same actions used in dealing with framing and priming. One must know oneself and ask questions about what is being decided, how it’s being decided, why it’s being decided, etc. Consider who might have influenced you in the process. Was there a push from leadership or a friend?


Anchoring

Framing draws on existing knowledge, and priming relates to recent stimuli, but anchoring relates to your very first impression. This is the tendency for humans to fixate on the first thing they see or hear. Those selling you things rely on this heavily. Ever seen a price tag that’s been “slashed” to give you a deep discount? That great Item X was $975, but now you can get it for only $375! Wow! How could you turn it down? Anchoring is at the heart of negotiations too. The first party to announce a price or other negotiating point has set the anchor. Trained negotiators know how to work around this, but most people simply stick close to that anchor when they make a counteroffer, if any. Yet anchoring doesn’t occur only in sales and negotiations. Here’s an example from David McRaney’s You Are Not So Smart (2012).

Answer this: Is the population of Uzbekistan greater or fewer than 12 million? Go ahead and guess.

OK, another question, how many people do you think live in Uzbekistan? Come up with a figure and keep it in your head. We’ll come back to this in a few paragraphs (McRaney 2012, 215).

Humans are given things to consider every day. Analysts are no exception; however, these considerations are never made in isolation. Consider a situation in which you begin the day with a briefing from your intel manager. The briefing emphasizes a new problem with Terror Organization X. You’re given information showing that some of this activity is taking place in your area of responsibility. What’s your likely tendency? Your leadership is interested in you finding something. Of course, you’re a professional and want to succeed. Thus, you have both organizational and personal motivations to start from this anchor point. The anchor narrows your search to a specific range, which could lead you to find connections that aren’t really there or to miss things that aren’t specifically connected to it.

Back to Uzbekistan. The populations of Central Asian states probably aren’t numbers you have memorized. You need some sort of cue, a point of reference. You searched your mental assets for something of value concerning Uzbekistan — the terrain, the language, Borat — but the population figures aren’t in your head. What is in your head is the figure I gave, 12 million, and it’s right there up front. When you have nothing else to go on, you fixate on the information at hand. The population of Uzbekistan is about 28 million people. How far away was your answer? If you are like most people, you assumed something much lower. You probably thought it was more than 12 million but less than 28 million.

You depend on anchoring every day to predict the outcome of events, to estimate how much time something will take or how much money something will cost. When you need to choose between options, or estimate a value, you need footing to stand on. How much should your electricity bill be each month? What is a good price for rent in this neighborhood? You need an anchor from which to compare, and when someone is trying to sell you something, that salesperson is more than happy to provide one. The problem is, even when you know this, you can’t ignore it (McRaney 2012, 215).

In the realm of analysis, there are a number of counters to this problem. The key is to use techniques that evaluate your answers. Humans are too quick to accept their own answers, whether from a lack of insight, laziness, arrogance, or many other reasons. Thus, one must use devices to constructively question one’s decision-making processes and the validity of one’s final decisions. Some recommended tools from the intelligence community include Diagnostic Reasoning, Analysis of Competing Hypotheses, and Argument Mapping. This is not an exhaustive list, though. Many other techniques and practices can help in this area. Sometimes something as simple as having a disinterested party evaluate your conclusion can help in a pinch.
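To make one of these tools more concrete, here is a minimal Python sketch of the scoring step in Analysis of Competing Hypotheses (ACH) as Richards Heuer describes it: each piece of evidence is rated against every hypothesis, and the hypothesis with the least evidence against it is favored, not the one with the most evidence for it. Everything in the example (the three hypotheses, the evidence items E1 to E4, their ratings, and the credibility weights) is hypothetical and built loosely on the Terror Organization X briefing above. The sketch covers only the tallying step and omits the rest of a full ACH, such as refining the matrix and testing how sensitive the result is to a few key items.

```python
"""Simplified scoring step of Analysis of Competing Hypotheses (ACH).

All hypotheses, evidence labels, ratings, and weights are hypothetical.
"""
from typing import Dict, List

# Rating legend: "C" = consistent, "I" = inconsistent, "N" = neutral / not applicable.
INCONSISTENT, NEUTRAL = "I", "N"

# Hypothetical hypotheses for the Terror Organization X briefing scenario.
HYPOTHESES: List[str] = ["H1", "H2", "H3"]
# H1: Organization X is operating in our area (the briefing's anchor)
# H2: A different group is responsible for the activity
# H3: The activity is ordinary criminal behavior

# Hypothetical evidence matrix: evidence item -> rating for each hypothesis.
MATRIX: Dict[str, Dict[str, str]] = {
    "E1": {"H1": "C", "H2": "N", "H3": "N"},  # the manager's briefing itself
    "E2": {"H1": "I", "H2": "C", "H3": "N"},  # chatter uses another group's jargon
    "E3": {"H1": "I", "H2": "N", "H3": "C"},  # funds traced to a local criminal ring
    "E4": {"H1": "C", "H2": "I", "H3": "I"},  # unverified claim of responsibility
}

# Hypothetical credibility weights; E4 is discounted because it is unverified.
WEIGHTS: Dict[str, float] = {"E1": 1.0, "E2": 1.0, "E3": 1.0, "E4": 0.5}


def inconsistency_scores(
    hypotheses: List[str],
    matrix: Dict[str, Dict[str, str]],
    weights: Dict[str, float],
) -> Dict[str, float]:
    """Sum the weighted 'inconsistent' ratings for each hypothesis.

    Only inconsistencies are counted: evidence that fits every hypothesis
    cannot help an analyst choose between them.
    """
    scores = {h: 0.0 for h in hypotheses}
    for evidence, ratings in matrix.items():
        for h in hypotheses:
            if ratings.get(h, NEUTRAL) == INCONSISTENT:
                scores[h] += weights.get(evidence, 1.0)
    return scores


def rank_hypotheses(
    hypotheses: List[str],
    matrix: Dict[str, Dict[str, str]],
    weights: Dict[str, float],
) -> List[str]:
    """Order hypotheses from least to most disconfirmed (ties keep input order)."""
    scores = inconsistency_scores(hypotheses, matrix, weights)
    return sorted(hypotheses, key=lambda h: scores[h])


if __name__ == "__main__":
    print(inconsistency_scores(HYPOTHESES, MATRIX, WEIGHTS))
    print(rank_hypotheses(HYPOTHESES, MATRIX, WEIGHTS))
```

With these made-up ratings, H1, the hypothesis the briefing anchored on, has the most supporting items yet ends up the most disconfirmed (2.0 against 0.5 each for H2 and H3). A simple count of confirming evidence would hide that. The ratings themselves remain analytic judgments; the value of writing them out is that a reviewer can see and challenge each one.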


Class Activity

As part of this week’s lesson, there’s a unique addition. To let you experience how these different mechanisms may play out in a tense setting where rapid analysis is required, we’ve produced a “create your own adventure” scenario. In this scenario, you’ll be provided an initial briefing and follow-up information. Along the way, you’ll be required to make decisions. You’ll have multiple options, but you can select only one at each decision point. At the conclusion of your adventure, you’ll be evaluated as members of the IC and military so often are. Once you’ve completed the scenario, you can select “Reset” to try different actions. Please join us afterward in the forum for a follow-on discussion.
